A Roadmap for Getting into Data Science

Everyone Wants to Be In

It’s no news that folks are flocking to data science; there are a great many aspiring data scientists who want to do AI things. I wrote this post as a starting point for beginners who want to break in. It does not discuss whether you should, or how much opportunity there is in this space; that’s a different topic.

This post also does not give you a big list of links. Instead, we build a structure for the learning process, and list a few resources at the end.

What is DS/ML/AI?

Definitions are of no help here. There aren’t well-defined boundaries around these terms, no self-evident ground truths that distinguish ML from AI or DS.

Broadly, the field is about learning patterns from data to solve business problems. Take, for instance, the price of a house. Traditionally, you would hand-write basic if-else rules that encode business logic to arrive at a price. With data science, you can use algorithms to learn these rules from data and predict the price given the attributes of a house. Similarly, you could:

- Predict when a given machine part could fail

- Flag credit card fraud

- Assess creditworthiness given the financial attributes of an individual

The ability to identify complex patterns in data is useful in almost every domain that has a significant amount of data with some underlying structure in it.
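To make the house-price example concrete, here is a minimal sketch contrasting hand-written rules with rules learned from data. Everything in it is an assumption made for illustration: the thresholds in `rule_based_price`, the six synthetic houses, and the invented pricing formula used to generate the targets. It assumes NumPy and scikit-learn are installed.

```python
import numpy as np
from sklearn.linear_model import LinearRegression


def rule_based_price(area_sqft, bedrooms):
    """Hand-written business rules (thresholds are made up for illustration)."""
    price = 100_000
    if area_sqft > 1000:
        price += 50_000
    if bedrooms >= 3:
        price += 30_000
    return price


# Synthetic training data: [area in sq ft, number of bedrooms].
X = np.array([[800, 2], [1000, 2], [1200, 3], [1500, 3], [2000, 4], [950, 1]])
# Invented pricing formula, used only to generate example targets.
y = 20_000 + 50 * X[:, 0] + 10_000 * X[:, 1]

# The model learns the relationship from examples instead of being told it.
model = LinearRegression().fit(X, y)

new_house = np.array([[1100, 3]])
learned_price = model.predict(new_house)[0]
print("Rule-based estimate:", rule_based_price(1100, 3))
print("Learned estimate:   ", round(learned_price))
print("Learned coefficients:", model.coef_, "intercept:", model.intercept_)
```

Because the targets here follow an exact linear formula, the model can recover it almost perfectly. Real data is noisier, and that is where the choice of algorithm and features starts to matter.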

Some Real Use Cases of Data Science

Types of Data - Tabular, Image and Text

The examples cited above are of tabular data. As the name suggests, it is data stored in rows and columns. This is the most common data format, and the easiest to work with. But there’s also image data and text data, both of which account for a growing share of useful industrial models.

- On Pinterest, the images that get recommended to you are based on ML models.

- The feeds that Facebook and Twitter show you are recommended by ML models as well.

- Face recognition software is used worldwide.

- Chatbots and translation tools all run on text-based ML models.

An Overview of Requirements

What do you need to become a data scientist, and what do you do day to day on the job?

I’d divide this into four topics: Technical Skills, Theoretical Knowledge, Domain and Business Knowledge, and Project Management. As a beginner, you only need to care about the first two.

Technical Skills

These are divided into two parts.

- Software Engineering: You should know how to code.

- Tools Specific to Data Science: Know thy tools!

Theoretical Knowledge

- Theory of Data Science: Learn from the wisdom of ancients!

- Mathematics: Basics will do fine.

Structure Your Machine Learning Journey

Here, we’ll expand on what these skills are, how you go about acquiring them, and in what order.

Python: For a beginner, the first step should be to learn how to code. Python is the most used language in this space, and it is a great language for a first-time coder to learn. In fact, the ease of coding is exactly the reason why Python came to dominate the machine learning landscape. This is a good free resource to start learning.

The basics of the language are enough to get started; you don’t need to grind LeetCode. You do need to get the hang of Jupyter notebooks and an IDE like VS Code, and be able to install packages.

Tabular vs Deep Learning: As a newcomer, you should aim to get proficient in tabular data before you move to tackling image or text datasets.

Tools: Knowing pandas (a library that handles tabular data) and matplotlib/seaborn (data visualization libraries) is the next step. Knowing the basic methods is enough at this stage.
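As a rough idea of what "basic methods" means for pandas, the sketch below builds a small table and runs a few common operations on it. The data is made up for illustration, and the table is created inline instead of being read from a file so the snippet runs on its own; in practice you would usually start from something like `pd.read_csv`.

```python
import pandas as pd

# Hypothetical toy data, invented for this example.
df = pd.DataFrame({
    "city": ["Pune", "Pune", "Delhi", "Delhi", "Mumbai"],
    "area_sqft": [800, 1200, 950, 1500, 700],
    "price_lakh": [40.0, 65.0, 70.0, 120.0, 90.0],
})

# First look: the top rows and summary statistics of the numeric columns.
print(df.head())
print(df.describe())

# Filtering rows with a boolean condition.
large = df[df["area_sqft"] > 900]

# Deriving a new column from existing ones.
df["price_per_sqft"] = df["price_lakh"] / df["area_sqft"]

# Aggregating: average price per city.
mean_price = df.groupby("city")["price_lakh"].mean()
print(mean_price)
```

Selecting, filtering, deriving columns, and grouping cover a large share of day-to-day dataframe work; plotting with matplotlib or seaborn usually starts from a dataframe prepared this way.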

Now that we have warmed up, we’ll step into the main process and discuss the sources to learn from.

The Problem Of Plenty

Where to learn from? There is just so much to choose from: so many courses, books, websites, Twitter influencers, LinkedIn influencers, an overflowing bookmarks folder, PDFs of unread papers. The pace of progress is overwhelming too. What was standard practice a year ago may not be today.

This can be overwhelming for a veteran, let alone a beginner. Separating the signal from the noise can be daunting, as can finding the best source to learn from.

Speaking from experience, what works best for me is learning on the job. The knowledge from every project I’ve worked on has stayed with me, while courses and books are tougher to retain. So if you’re employed, give your best to your own project; it’s the best way to upskill.

However, if you’re not employed, or your job is narrow in its scope and you want to upskill, the problem persists. There should be a process to identify and follow the materials. (My personal recommendations are at the end of this post.)

Courses: Instead of sampling and browsing dozens of courses, it is better to simply pick one and get started. The time you waste looking for the perfect course is better spent completing an okay course. The most important thing is to get moving and not stagnate.

Books: There are some books that are structured as tutorials. Don’t delve deep into theory at this stage. If a book contains code snippets, is a recent edition, and is easy to read, that’s the one.

Websites and Blogs: Keep browsing Medium, Towards Data Science, KDnuggets, etc. Keep it light.

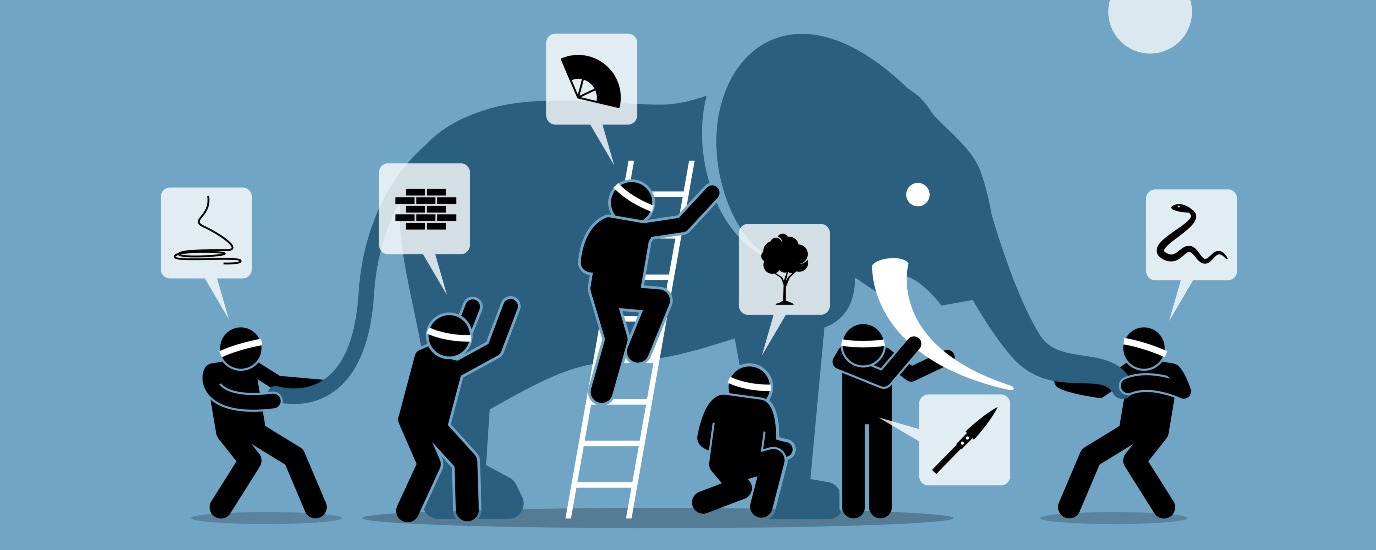

Blind Men and the Elephant

Due to the problem of plenty, it is important to keep things simple. Don’t follow too many threads; pick a few sources and stick with them. For a course, pick any one and complete it without distraction, but remember that it is not everything: it has only shown you one part of the elephant. This is much like the parable of the blind men and the elephant, where each man describes the elephant differently because each is feeling a different part of it.

Doing an online course could show you one part of this elephant: the theory behind algorithms like linear regression and random forests. There are many helpful books that can do this too. To cover other topics, you’ll need other things.

It Can Get Overwhelming

I know that’s a lot of stuff, right? Data science can be overwhelming for a veteran, let alone one who’s just starting their journey. There is so much to learn, from the theory to the latest tools, the latest research, etc. Which is why it is important to remember that it is not necessary to learn everything.

List of Topics

This is a rough outline of what your learning journey could look like. It is not exhaustive.

- Python, SQL (Basics)

- Pandas and Matplotlib (Basics)

    - Reading and basic operations on dataframes

    - Basic plotting

- Data Science Theory

    - Supervised vs Unsupervised Learning

    - Different ways of representing data (encoding, binning)

    - Supervised learning algorithms (linear regression, trees)

    - Unsupervised learning algorithms (clustering, PCA)

    - Model evaluation and metrics

    - Overfitting, underfitting

    - Ensembles, correlation, feature engineering, outliers, regularization

- Practice

    - Use datasets to create models

    - Data visualization and EDA

- Full-Fledged Projects

My recommended resource to start with is the book Introduction to Machine Learning with Python by Andreas Müller. It is a very easy-to-read book that contains code snippets and gradually introduces all these topics. You can also complete the Kaggle courses; they’re friendly, brief tutorials, and you can run them right in your browser.

This outline doesn’t include deep learning (computer vision or natural language processing), or model deployment.
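Two items from the outline, encoding and binning, are easier to grasp with a small example. The sketch below uses pandas with made-up data; the bin edges and bucket names are arbitrary choices for illustration, not recommended values.

```python
import pandas as pd

# Hypothetical toy data, invented for this example.
df = pd.DataFrame({
    "city": ["Pune", "Pune", "Delhi", "Delhi", "Mumbai"],
    "area_sqft": [800, 1200, 950, 1500, 700],
})

# Binning: turn a continuous column into ordered buckets.
# Each bin includes its right edge, e.g. (0, 900] is "small".
df["size_bucket"] = pd.cut(
    df["area_sqft"],
    bins=[0, 900, 1300, float("inf")],
    labels=["small", "medium", "large"],
)

# One-hot encoding: one indicator column per category of "city".
encoded = pd.get_dummies(df, columns=["city"])
print(encoded)
```

Most models need numbers, not strings, which is why a categorical column like `city` is expanded into indicator columns. Binning goes the other way and deliberately coarsens a numeric column when the exact value matters less than the range it falls in.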

A Practice-First Discipline

The first two blocks are in place: the coding environment and the theory of data science. DS is a very practice-oriented discipline. Theoretical knowledge makes up only a small part of a data scientist’s mindspace. It is in the dirty trenches of notebooks that real skills are proven.

If your resume contains just a bunch of courses, it does not give an interviewer any idea of your prowess. But having projects, notebooks, and repos is a sign that you have been there and done the things that matter most. What things, you ask?

Reading, understanding, cleaning, processing, and preparing data is the single most important aspect of data science, and it is often not given the necessary importance even by those long in the game. These skills cannot be taught by any course or book; they must be learned by doing.

Exploratory Data Analysis (EDA) is a term you’ll come across quite often. It is an art more than a science, and another skill that you’ll learn through practice. Understanding the contents of your data, asking it the right questions, and making it answer: this is our bread and butter.
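To give a flavour of what cleaning data and "asking it questions" looks like in code, here is a small sketch with pandas. The table is invented for illustration, and filling missing values with the median is only one of several reasonable choices, picked here for simplicity.

```python
import numpy as np
import pandas as pd

# Hypothetical raw data with two common problems:
# missing values and a duplicated row.
raw = pd.DataFrame({
    "age": [25, 32, np.nan, 41, 32, 58],
    "income": [30000, 52000, 45000, np.nan, 52000, 91000],
    "defaulted": ["no", "no", "yes", "no", "no", "yes"],
})

# Understand: how many values are missing in each column?
missing_before = raw.isna().sum()
print(missing_before)

# Clean: drop exact duplicate rows, then fill gaps with the column median.
clean = raw.drop_duplicates().reset_index(drop=True)
for column in ["age", "income"]:
    clean[column] = clean[column].fillna(clean[column].median())

# Ask a question: does average income differ between the two groups?
income_by_outcome = clean.groupby("defaulted")["income"].mean()
print(income_by_outcome)

# Ask another: how are the two classes distributed?
print(clean["defaulted"].value_counts())
```

On a real dataset each of these steps raises follow-up questions (why are values missing, are the duplicates genuine repeats), and chasing those questions is most of what EDA is.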

Then there are feature engineering, dealing with imbalanced data, model training, and model evaluation, all of which are practical skills. Fortunately for us, there is a single place to learn all of the above: Kaggle.

Kaggle is a website where you can train machine learning models for free using the many datasets available there. (It also hosts competitions, but at this point we are focussed on learning.) Simply copying and editing someone else’s publicly available notebooks can be incredibly helpful for understanding various workflows. Spend your time stitching together a chimaera of a dozen code blocks from a dozen notebooks for a single task, and you will learn a great deal from it.

Don’t Heed the Kaggle Haters

Kaggle has been criticised as unhelpful for a data science job, since you always get a clean dataset in hand, which is never the case in real life. While it is true that real-life data is much messier than Kaggle data, practicing on Kaggle has far more upside than downside. You learn to max out your feature engineering, data visualization, and EDA skills, and you also learn how to iterate rapidly. Kaggle is helpful without a doubt.

You also get to learn about the latest techniques, libraries, and models, and you see empirical evidence of which methods work and which don’t.

Projects

Once you are able to write a Kaggle notebook from start to end, you have pretty much achieved the baseline of what you set out to do. So if you’re at this stage, you should sit back and congratulate yourself.
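For a sense of scale, a start-to-end notebook in miniature could look like the sketch below: load data, split it, train a model, and evaluate it. This is an illustration under stated assumptions, not a Kaggle notebook. It uses the breast cancer dataset bundled with scikit-learn so that it runs without downloads, and it compares a very shallow decision tree with an unrestricted one to show what to look for when checking for underfitting and overfitting.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

# Load a small tabular dataset that ships with scikit-learn.
X, y = load_breast_cancer(return_X_y=True)

# Hold out part of the data so the model is judged on rows it has not seen.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, stratify=y, random_state=0
)

results = {}
for name, depth in [("shallow", 1), ("unlimited", None)]:
    tree = DecisionTreeClassifier(max_depth=depth, random_state=0)
    tree.fit(X_train, y_train)
    results[name] = {
        "train": accuracy_score(y_train, tree.predict(X_train)),
        "test": accuracy_score(y_test, tree.predict(X_test)),
    }
    print(name, results[name])
```

When reading the output, compare train and test accuracy for each tree. A model that scores much higher on the training split than on the test split is likely overfitting, while one that scores poorly on both is likely underfitting.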

If you want to graduate to the next level, you should start doing full-fledged projects that solve a meaningful problem. Here, you can challenge yourself by using data that’s not from Kaggle.

This includes some advanced topics that can be skipped by a beginner, but if you are employed as a data scientist, these would be quite important.

How would this differ from a Kaggle project?

- work with .py files instead of a notebook

- write comments, docstrings in order to be helpful to the reader

- use sklearn pipelines to bundle the pre-processing steps with the model, which makes inference easier.

- write modular, readable code.

- learn code style guidelines such as PEP 8, and use tools like black and isort.

- write tests.
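Several of the points above (a pipeline, a docstring, modular code, a test) fit into one short `.py` file. The sketch below is one possible shape for it, not a prescribed layout: the function names are hypothetical, the scaler and logistic regression are arbitrary choices, and the bundled iris dataset stands in for real project data.

```python
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler


def build_pipeline():
    """Return an unfitted pipeline that scales features, then classifies.

    Keeping pre-processing inside the pipeline means the exact same
    scaling is applied at training time and at inference time.
    """
    return Pipeline([
        ("scale", StandardScaler()),
        ("model", LogisticRegression(max_iter=1000)),
    ])


def test_pipeline_predicts_one_label_per_row():
    """A pytest-style test: predictions line up with the input rows."""
    X, y = load_iris(return_X_y=True)
    X_train, X_test, y_train, _ = train_test_split(X, y, random_state=0)
    pipeline = build_pipeline().fit(X_train, y_train)
    predictions = pipeline.predict(X_test)
    assert len(predictions) == len(X_test)
    assert set(predictions) <= set(y)


if __name__ == "__main__":
    test_pipeline_predicts_one_label_per_row()
    print("pipeline test passed")
```

A test runner such as pytest would pick up the `test_` function automatically if this lived in a test file; running the file directly works too.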

Domain Knowledge

This section is not applicable for a complete beginner.

Nobody pays you to fit a model to a CSV. The purpose of data scientists, at the end of the day, is to use their skills to add value to the business. That purpose, along with understanding the data, formulating problems to solve, and evaluating solutions, requires knowledge of the business systems you work in. This includes industry knowledge, how processes flow inside your own firm, the meaning behind the data, and more. Acquiring this knowledge requires communication skills and the ability to ask the right questions.

Unlike specialists, data scientists also need to rotate across different businesses. If today you are building a recommender system for ecommerce, tomorrow you could be building a failure prediction model for aircraft engines. The variety of domains we operate in is vast, so the ability to onboard quickly is important too.

Deep Learning

Well, we only learned about tabular data! Where’s the cool stuff about neural networks and ChatGPT? This post was supposed to include deep learning topics as well, but it has grown too long, so they’ll be covered in a separate post.

However, if you need a quick tutorial on them, you can follow the Kaggle courses.

A second place to grok deep learning is reading and running public kernels on Kaggle. A third is the official documentation of libraries like Keras.

Deep learning is a very empirical field where practice and theory go hand in hand. The traditional serial approach of reading up on theory and then working through examples will not work for deep learning; the two have to go together. As a beginner, it is best not to go too deep into the theoretical aspects of deep learning before getting hands-on experience with its applications.

Resources

In order to avoid the problem of plenty, I will keep this list as short as possible.

Book - Introduction to Machine Learning with Python by Andreas Müller. This covers theory and practice at the same time while being beginner friendly.

Course - Most courses, like Andrew Ng’s, are quite heavy on mathematics and the “backend” of ML algorithms. I personally think this is not a good place to start for a newcomer. However, if you have a background in STEM, you might like this approach of thinking from first principles.

Kaggle Learn - Most people know Kaggle for building models and competitions. But Kaggle also has a courses section with brief, easy-to-follow tutorials on topics like pandas, data visualization, etc. They are fantastic for a beginner.

Kaggle Notebooks - Spending time here will teach you almost everything about model building.

That’s all for this beginner’s notebook. Good luck on your machine learning journey!